The Whale

Or, Möbius Dick

Call me Ven.

Almost three years ago, I pointed out how Microsoft’s stake in OpenAI was structured in a very odd manner for a bet that has been ceaselessly hyped as the most bullish, paradigm-shifting technology ever invented:

What strikes me is that Microsoft’s proposed investment in OpenAI resembles a preferred-share agreement much more than it does a typical venture investment. We have talked a bit about the difference between equity and debt investments:

Equity investments are based on prognostication — you think that the future forward earnings of the company will be markedly increased compared to its current day earnings, so you invest. Debt investments are loans — I gave you money to grow your business, so you better pay me back with what you earn over time.

While Microsoft’s investment is clearly an equity investment bullish on the overall ability of OpenAI to facilitate the production of a minimum viable product, it’s interesting to me that the proposed terms highlight a right to 75% of OpenAI’s profits — it’s kind of like having the ability to exercise an option to cash out rather than essentially being required to plunge any revenue into reinvestment to potentially gain a positive ROI as you’d normally expect from a venture equity position. To me, this “fail-safe” implies a certain level of skittishness that OpenAI will ever produce anything of real go-to-market value. While $10 billion to buoy the cash burn is nothing to a company with $50 billion in quarterly revenue, it’s telling that software that supposedly will be folded into Bing presumably to destroy the Google search monopoly doesn’t get a carte-blanche check for that amount.

What I didn’t fully appreciate back then is how much premium was being paid for this low-delta bet.

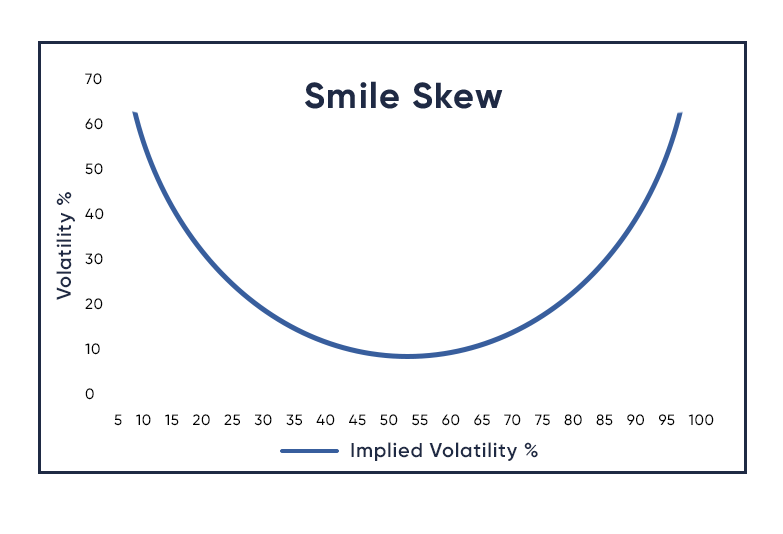

Usually, when you are betting on tail outcomes, the raw premium itself is quite low — if you look at a traditional volatility smile, what you’re really paying for is the implied volatility in the wings.

It’s why valuation, in a sense, is meaningless in private markets — you set your own marks, create a bit of circular revenue (which is the point of an “accelerator”: it would be ridiculous to have everyone start from scratch over and over, since nothing would ever take off), and give some talent a chance to break the rules in a controlled environment. Founding a startup in your 20s is, in many ways, the logical progression from undergrad for anyone who wants to break out of the normal distribution; a “track record” is a sign that you’ve beaten a series of iterative games and are ready to take on the “real world”.

This system only works if there is an actual product being delivered at the end. “Venture capital” in its original usage was about whaling expeditions — killing and capturing a whale was a net resource gain that made the expedition worthwhile in the first place. In the modern world, the whales are ideas, but there is still a product attached — Google Maps might have been the spiritual successor to the explorers of the 1000s, and, via Uber, it eventually transformed how we navigate the world. In effect, the biggest ideas are always about network expansion.

What exactly is the product with AI?

I have read way, way more than I ever care to repeat, and I’m unfortunately familiar with every AGI/ASI/p(doom) acronym, to the point where I wish I could erase my memory Eternal Sunshine of the Spotless Mind style. And, clearly, I have found real utility in the non-coding, esoteric uses myself:

Look, I am a believer in the AI “warp” — I’ve personally seen what this can do, work with it constantly, and am in the process of uploading myself to the cloud. The core problem is, what does this mean for everyone else? 99.9% of people won’t find any real use case for this, and if finance doesn’t become invalidated, this surely means that wealth is going to hyperpolarize even beyond where it already is. The meta that is almost certainly going to die is the 1-1.5 sigma section of society, because they are the most likely to be current-day high-value credentialed specialists, and the value of that skillset has simply shifted.

With the foundation model question, I don’t think anyone has truly asked what we can build with these models, and it’s become too costly to ignore the question. There is no way to generate “ad revenue” like the old web2 model of the internet, or “trading fees” like the web3 model, because the ad revenue is being funneled into capex to build these products. You can’t create a perpetual motion money machine without central bank printing, and that is not possible anymore.

What we need is to rapidly shift back to a “defined input, verifiable output” mentality, and I don’t think anyone other than absolutely elite algorithmic thinkers is capable of framing AI in a workflow accordingly. It is quite literally too complex for most of the population to use, which is why the phenomenon of “one-shots” occurs. Humans are creatures of pattern recognition, and the models are sirens that have been created to cater perfectly to this. They’ve literally been trained on all of our data to do this! It’s no different than seeing a mermaid on a voyage — it’s just in our pocket.

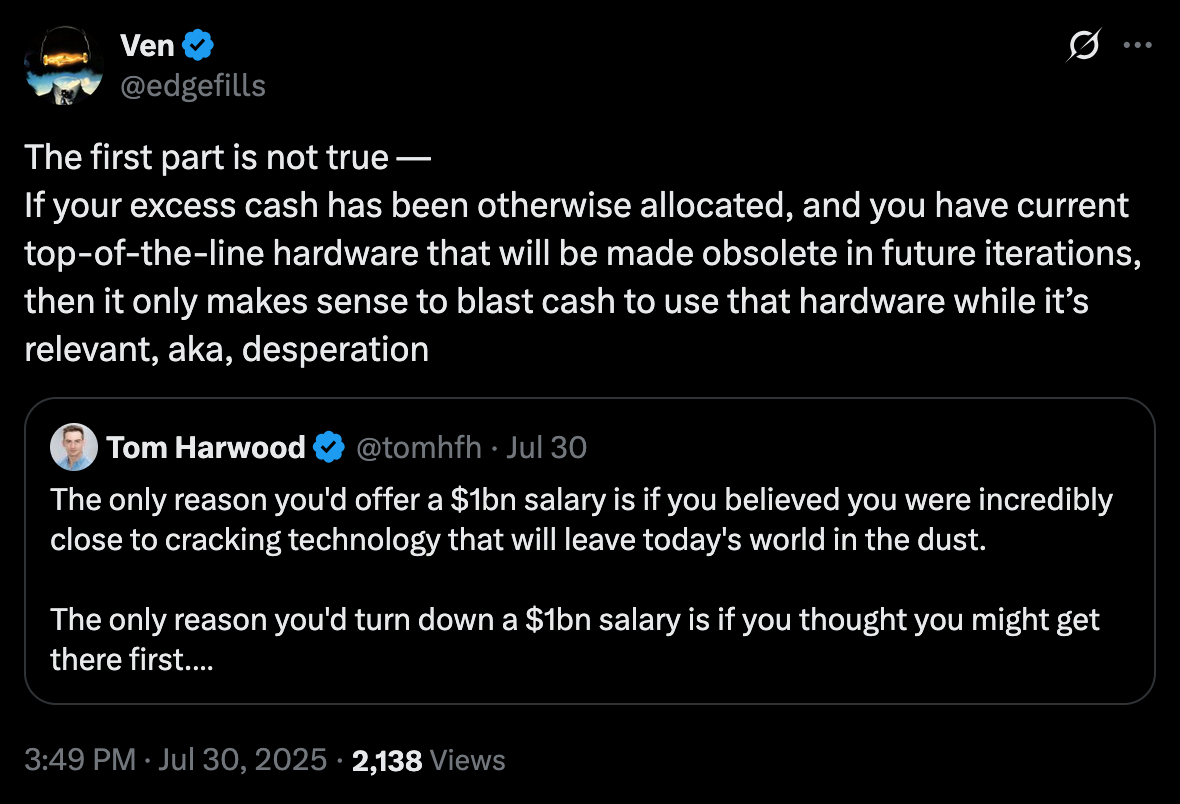

When others see signal, I see desperation.

If you are a doomer, and I frequently claim I am, the proper trading strategy is to be aggressively bullish. Betting on the downside is dependent on luck, and is fundamentally antisocial. This is (partly) why I hate Michael Lewis and The Big Short, and it’s what tech people don’t understand — getting rich quick is also antisocial. Wealth must be earned, and cashing out when the facade gets eviscerated (and, let’s be clear, most wealth is a facade) leaves a bad taste in everyone’s mouth. Everyone with a brain saw it coming, but they tried to stem the panic, because shorting is a self-fulfilling prophecy. Markets don’t crash because sellers overwhelm buyers, but because of a complete lack of bid — aka no liquidity in the underlying. That’s what Brad Pitt meant by “don’t dance.” Making money betting on big crashes is like making money on a crypto rug. You’ve won, but at what cost? You’ve ostracized yourself from the rest of the decent world.

And the reason I called the top on NVDA basically to the tick is precisely because I was so aggressively bullish in my positioning prior (not in the stock itself, but in how I managed correlated risk).

The tell here was bizarre — something at META has gone extremely wrong and nobody knows how to fix it.

If you look at the articles about how energy consumption has been maxed out — that you can’t buy any more power — the cynical reading is that there are no more chips to buy, so the only play is to go all in.

All these people are loaded anyway. A billion dollars means nothing if you are sidelined from what you believe to be the cutting edge of the field. There’s literally no value in the excess wealth if you’re going to be working nonstop anyway. The reward function simply isn’t there.

Do I think there’s substance to Meta’s bet? Of course, but the only read I have from this is that they’ve cornered an expiring GPU market and have nothing useful to do with it.

My hunch is that Meta has maxxed out brain-rotting its userbase to the point where there’s no intelligent, unique training data left in its corpus. Aka, the only advantage of having a social platform (unique training data) is worse than useless.

Note that this is not a new take; I pretty much said that you can’t just train your way to superintelligence off of endless amounts of data all the way back in January 2024:

As I like to put it, isn’t it incredible how the white lines drawn around the murder victim fit the body perfectly? Obviously LLMs will look incredible if you ask them something they’re trained to do. But this is not a defined-input, verifiable-output problem — you simply can’t keep throwing more data and compute at it to overfit to reality itself. (It’s like overfitting in polynomial interpolation — sure, the curve looks better the more points you force it through, but it’s suboptimal to keep refining it to fit too well. What’s the point of the curve?) It’s too complex a system.
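The textbook version of that curve-fitting trap is Runge’s phenomenon. Below is a minimal Python sketch, assuming equally spaced sample points of the function 1/(1 + 25x²) on [-1, 1]; that function and setup are the standard classroom illustration, chosen here for convenience rather than taken from the original post. The interpolating polynomial passes through every sample exactly, yet its worst error between the samples grows as more points are forced through it.

```python
def runge(x: float) -> float:
    """Classic test function 1 / (1 + 25x^2), chosen here for illustration."""
    return 1.0 / (1.0 + 25.0 * x * x)


def equispaced(n: int) -> list[float]:
    """n equally spaced points on [-1, 1] (assumes n >= 2)."""
    return [-1.0 + 2.0 * k / (n - 1) for k in range(n)]


def lagrange_eval(xs: list[float], ys: list[float], x: float) -> float:
    """Evaluate the unique polynomial through (xs[i], ys[i]) at x, in Lagrange form."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                basis *= (x - xj) / (xi - xj)
        total += yi * basis
    return total


def fit_error(n: int) -> float:
    """Worst error at the n sample points themselves: the 'fit' the curve is judged on."""
    xs = equispaced(n)
    ys = [runge(x) for x in xs]
    return max(abs(lagrange_eval(xs, ys, x) - y) for x, y in zip(xs, ys))


def between_error(n: int, grid_size: int = 2001) -> float:
    """Worst error on a dense grid, i.e. mostly in the gaps between samples."""
    xs = equispaced(n)
    ys = [runge(x) for x in xs]
    grid = equispaced(grid_size)
    return max(abs(lagrange_eval(xs, ys, x) - runge(x)) for x in grid)


if __name__ == "__main__":
    for n in (5, 11, 21):
        print(f"{n:2d} points: error at samples {fit_error(n):.1e}, "
              f"worst error between samples {between_error(n):.2f}")
```

Going from 5 to 21 points, the error at the samples stays at zero while the worst error between them climbs from under 1 to well over 10: more fitting, worse curve.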

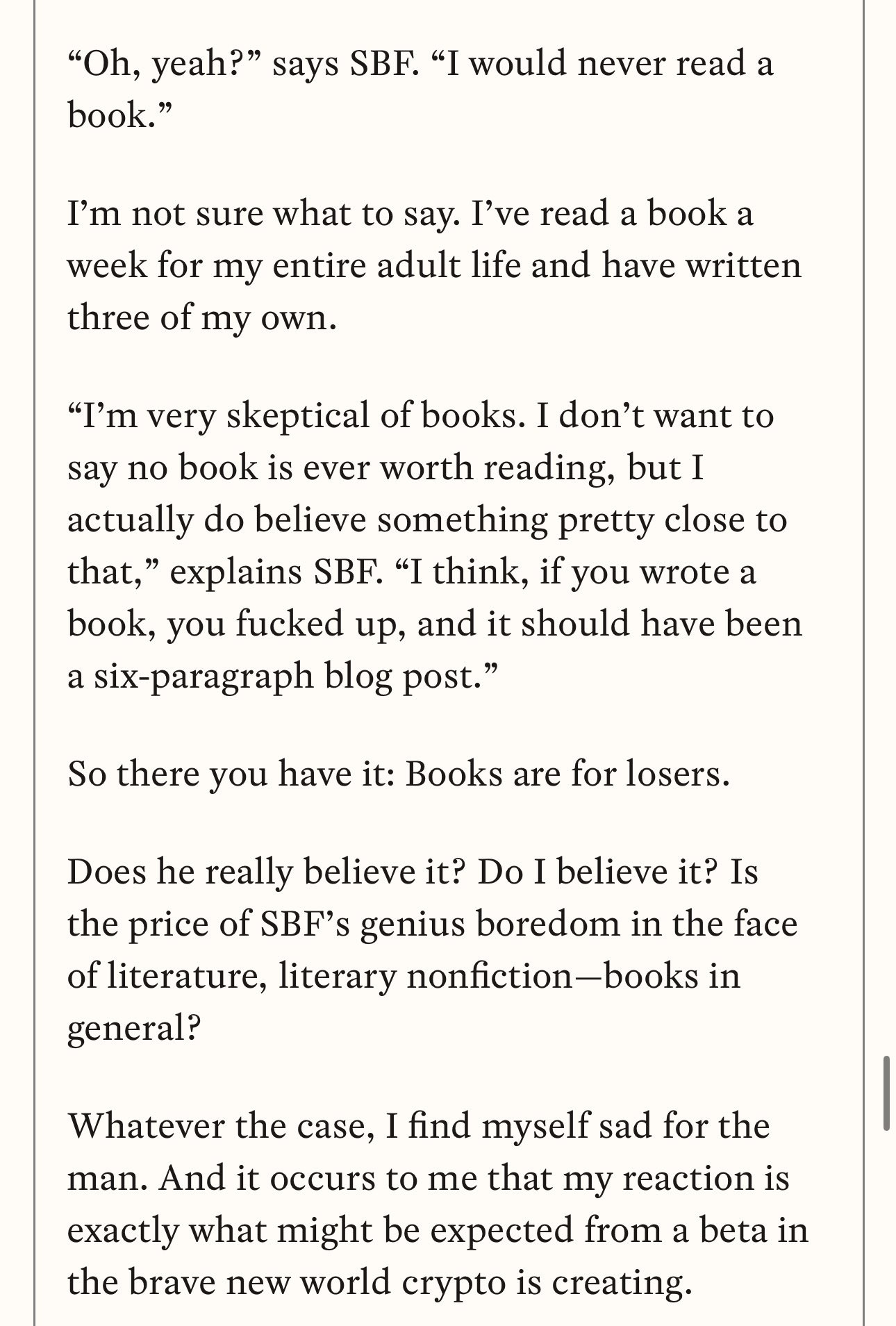

And, in a weird way, I think I can diagnose the failure points of the overall approach here, on some small level — I found out that nobody in tech really reads any literature or fiction at all.

Allow me to explain some terminally online blogger drama briefly:

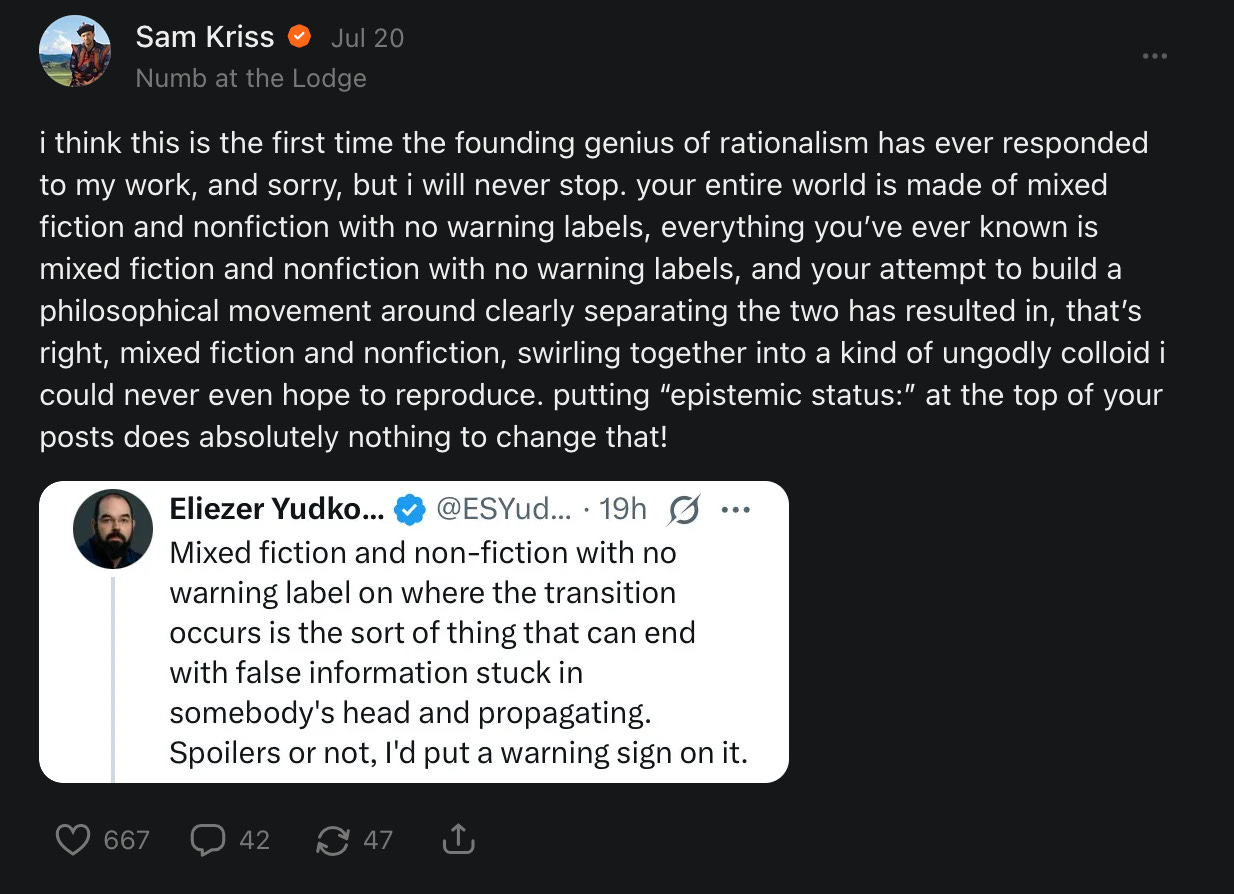

There’s a writer on this site named Sam Kriss who might be the best writer I’ve found on the internet in the past decade. He consistently writes in a genre of literature that I adore called “schizolit” — think Notes from Underground or Journey to the End of the Night: stylized, manic ranting that frequently veers from fact into fiction into allegory. (My personal favorite is Five Prophets, which noticeably influenced my own writing.)

Anyway, a group of tech-adjacent readers picked up one of his posts and complained that “the fiction and nonfiction was not properly labeled”. Kriss responded with a scathing takedown of the “rationalist” community, which can be summarized by one of his posts:

As I made my way through this discussion, I felt despair in a sense I can’t quite explain. I was reminded of that anecdote about how SBF refused to read a book, from that hilarious Sequoia profile that has since been scrubbed.

Financial analysis is probably the best form of fiction out there.

But beyond journalism, in a sense, all financial analysis is a bit of fiction, which is why so much of the “actual” industry is about storytelling. A hedge fund is a story about why you should manage someone else’s money (as, really, the level of delusion required to truly believe you should be doing this at scale is Icarus-tier), a startup is a story about how you’re going to change the world (as I wrote about in Macbooks vs Wristwatches), and a prospectus or financial model is a story about bounding the possibilities of the future. As one of the greatest movie scenes ever highlights, nobody, whether you’re Warren Buffett or Jimmy Buffett, knows whether a stock is going up, down, sideways, or in circles. It’s a fugazi.

And it never ceases to delight me how I can spin narratives between all the players in the world simply by having an internet connection, and speculate on them profitably. (This is why prediction markets aren’t necessary: they’re too binary. The stock market is the ultimate prediction market, and trying to reduce a complex system to a binary is precisely the hubris of man that has destroyed fluid systems time and time again in history.)

It’s not that SBF is, per se, wrong — it’s just that he’s right in the wrong direction. You don’t need endless amounts of training data; you need to realize that, no matter what, a ton of it is just pure junk. This is an esoteric judgment; you can’t quant it out or fine-tune a model to do it for you. Quality sensitivity is a function of intuition, and downplaying this is denying the superpower the human brain possesses: pattern recognition from minute amounts of data. There’s a famous story about Stephen Curry where, in the 2022 NBA finals, he noticed the rim was slightly too high during warmups because his shots weren’t going in. It’s just as true for me: I’ve probably read more in my 24 years of being literate than tens of millions of humans put together, maybe more. It’s so, so obvious when something is botted, astroturfed, poorly edited, copied and pasted, and inorganic that I’m shocked other people cannot see it:

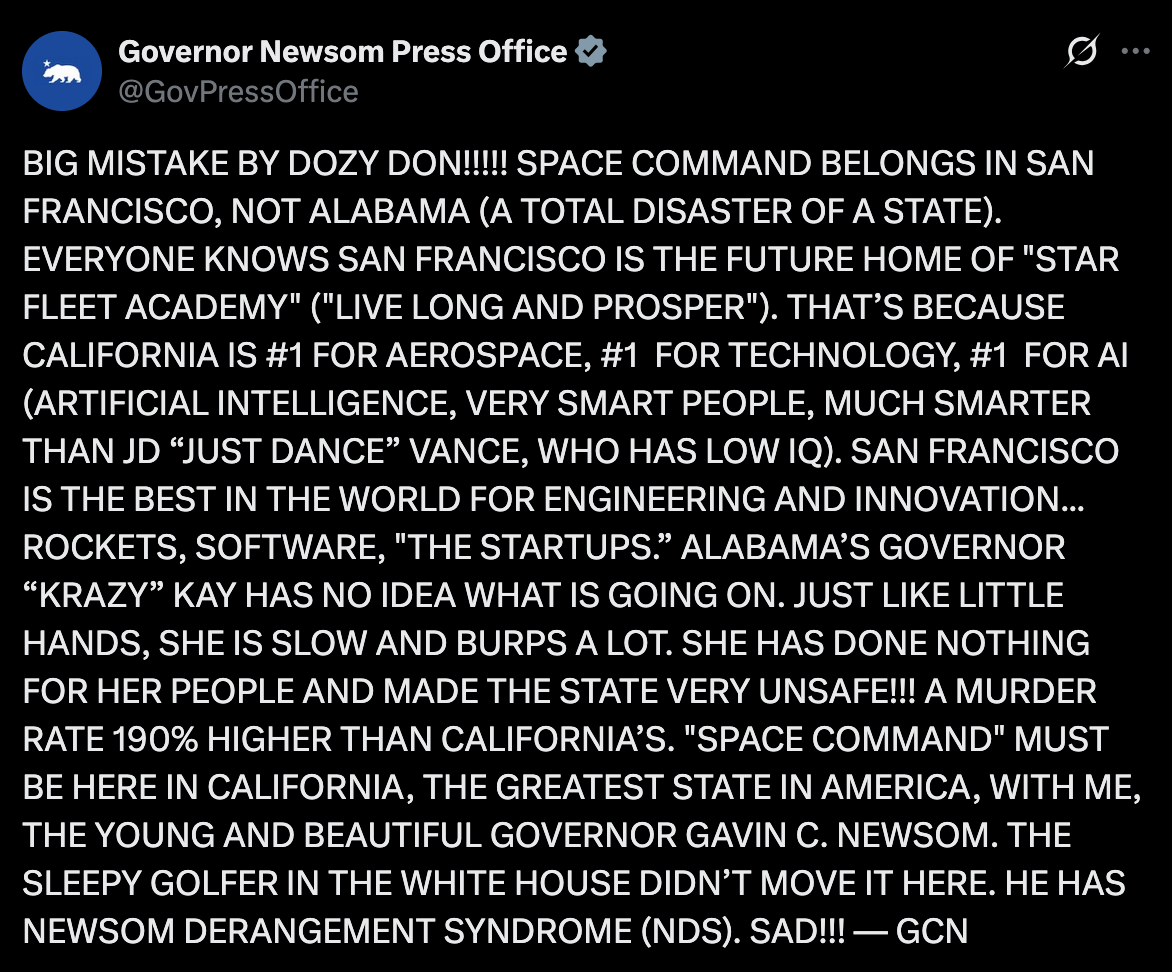

Ignore the scent of desperation from an increasingly irrelevant politician and focus on the content for a second. A trick every good writer uses is simply reading things out loud. Whatever Temu AI model was used to generate this scrawl cannot account for cadence properly — it’s why you see a bunch of Trumpisms that have barely been translated but don’t have the natural feel of his rhythm. (Indeed, no matter your opinion of him, Trump’s sense of timing is better than the best comedians’.) LLMs try to correct this problem by using em-dashes. I use them all the time, but in a very conversational style — I like using them as breaks in sentences when I’m trying to redirect my thought. Hilariously, LLMs do the same thing, but in such an obvious, clinical fashion that they’ve basically left the internet bereft of original content.

Part of why I think Gemini does much better nowadays is that it’s centered around the unique training data of Gmail (if I had to take a pure guess). Up until the AI explosion of ~2024, when ChatGPT could successfully supplant the average person’s ability to write, people did put effort into writing emails. If you scrap all the emails written after 4o, you are still left with white-collar college graduates with significant brainpower expending effort on email formatting and phrasing. On the other hand, if a big part of the base your model’s prose is trained on is Reddit, well, that’s been the worst content on the internet for at least a decade. It’s like Subreddit Simulator, that old shitty Markov bot trained on individual subs to create a fake “interaction”, broke containment.
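For anyone who never saw it, the Markov-bot approach is simple enough to sketch. Below is a minimal word-level (bigram) Markov chain in Python; the toy corpus, the function names, and the choice of exactly one word of context are illustrative assumptions, not Subreddit Simulator’s actual configuration. Each next word is drawn only from words that followed the current word somewhere in the training text, which is why the output sounds locally plausible while saying nothing.

```python
import random
from collections import defaultdict


def build_chain(text: str) -> dict[str, list[str]]:
    """Map each word to every word that directly follows it in the text."""
    words = text.split()
    chain: dict[str, list[str]] = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return dict(chain)


def generate(chain: dict[str, list[str]], start: str, max_words: int, seed: int = 0) -> str:
    """Random-walk the chain from `start`, stopping early at a dead end."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    out = [start]
    while len(out) < max_words:
        followers = chain.get(out[-1])
        if not followers:  # this word never had a successor in the corpus
            break
        # Repeated entries in the list make common word pairs more likely.
        out.append(rng.choice(followers))
    return " ".join(out)


if __name__ == "__main__":
    # Hypothetical toy corpus standing in for one subreddit's comment history.
    corpus = "this is the way this is fine the way it is fine by me"
    chain = build_chain(corpus)
    print(generate(chain, "this", 12, seed=42))
```

Every adjacent word pair in the output really did occur in the corpus; nothing above the level of word pairs is modeled at all.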

I don’t know how you’d do it, because the scale of the problem is cataclysmic given how much of a feedback loop interaction is at this point (e.g., people using it to send emails and the recipients using it to summarize them), but I think a lot of the obviously crap content has to be manually slashed out of training data. Maybe it’s time to give the old guard humanities types a call!

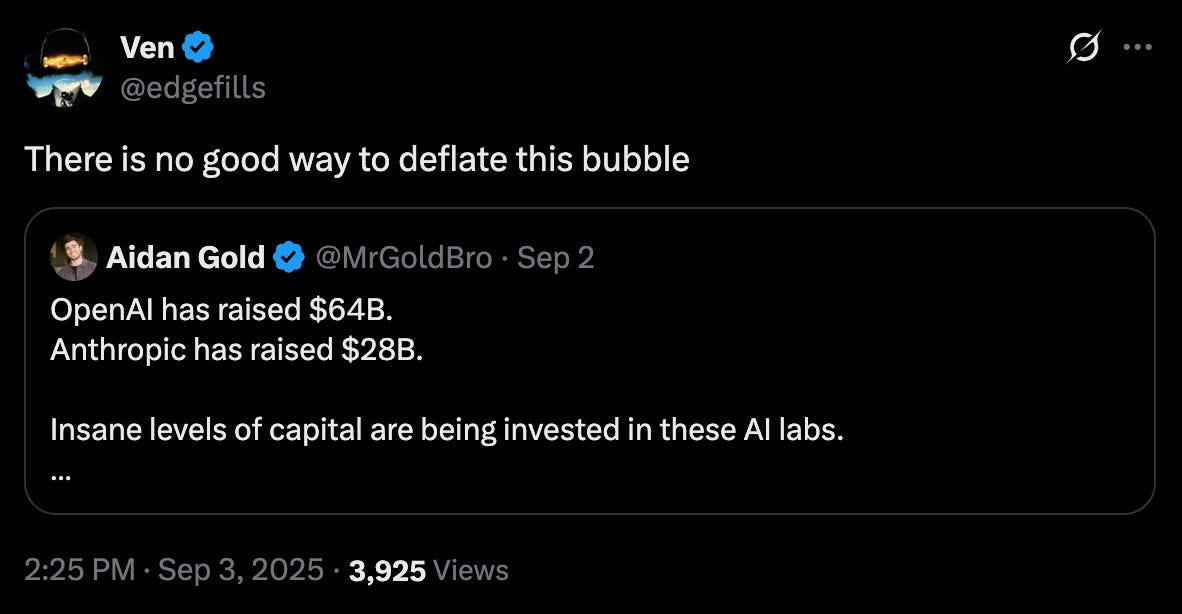

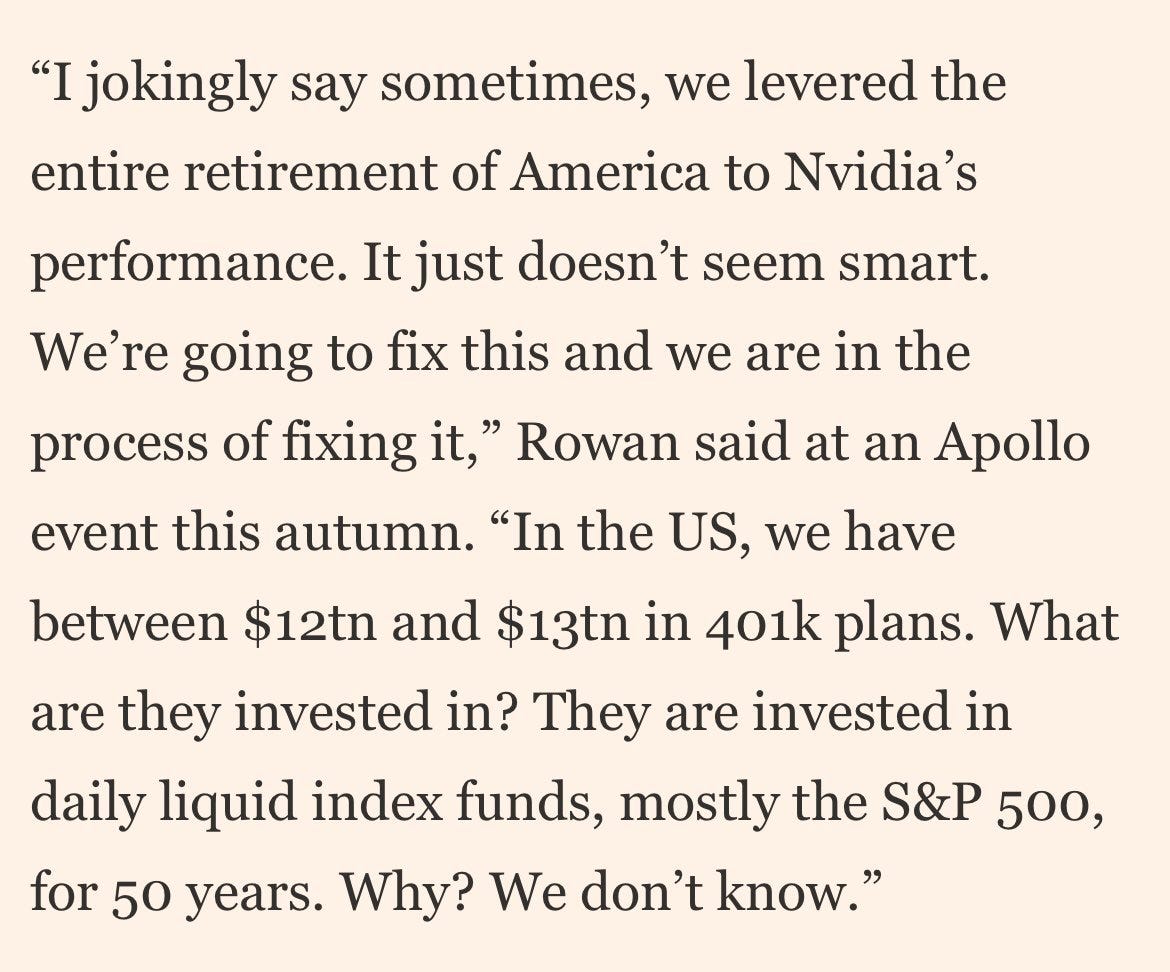

There are too many signs that the utility of capex has capped out, that AGI is a white whale, not one that can be captured. Nothing has changed in the scale of the bet — there is far, far too much at stake for this to not work out in some capacity. But the mechanics of fiat have disincentivized capital efficiency (yes, this all comes back to passive investment) to the point where this bubble can’t be safely deflated:

Value creation always depends on bubble mechanics, else you get capital creation for the sake of it. This one, though, is too big to handle. Combine this with the mostly passive state of capital markets, and you wonder: do "retirement funds" even really exist? Too many people are "rich" on paper with zero ability to allocate capital. This facade has to collapse somehow, but think back to the tariff drama — everyone is programmed to think they're "earning" this money. There's no growth mirage anymore, it’s just number-go-up for the sake of it.

Moby Dick is a tale about one ship, one whale, one man, and one crew. I never thought I’d say it, but I wish it was that simple here.
